ML Interview Question: Hyper-parameter optimization

Answers

-

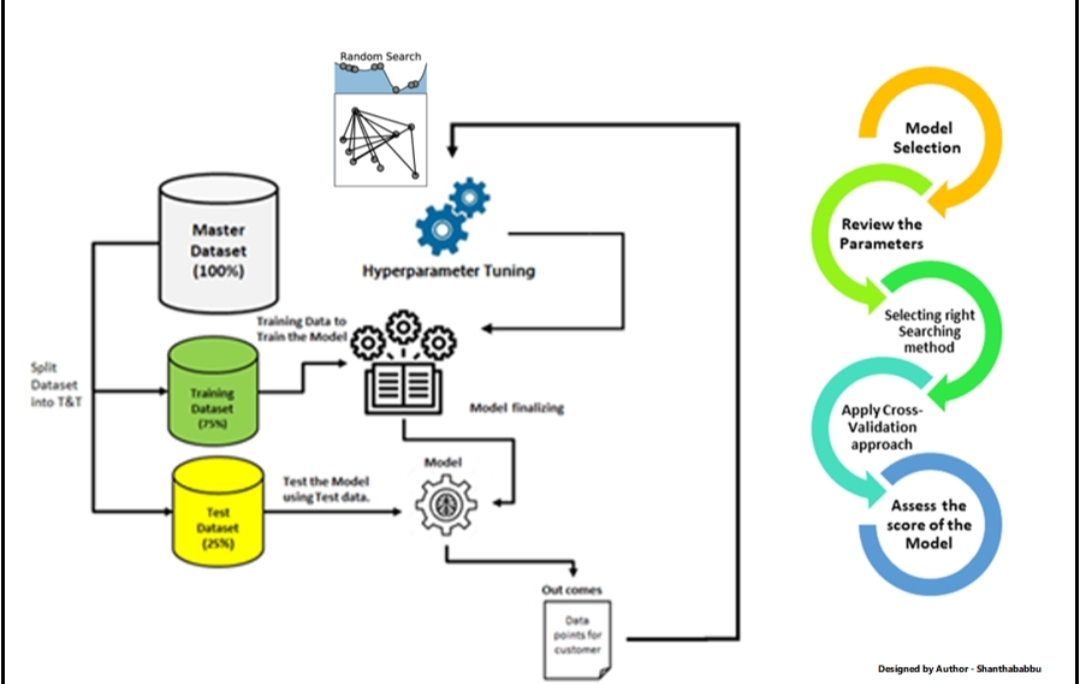

In machine learning, hyperparameter optimization or tuning is the problem of choosing a set of optimal hyperparameters for a learning algorithm. A hyperparameter is a parameter whose value is used to control the learning process. By contrast, the values of other parameters (typically node weights) are learned.
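To make the distinction concrete, here is a minimal sketch (not tied to any particular library) that fits a one-parameter linear model by gradient descent. The function `fit_slope`, the toy data, and the specific values are assumptions chosen for illustration: `learning_rate` and `n_epochs` are hyperparameters set before training, while the weight `w` is learned from the data.

```python
def fit_slope(xs, ys, learning_rate, n_epochs):
    """Fit y = w * x by gradient descent on the mean squared error.

    learning_rate and n_epochs are hyperparameters: they are chosen up front
    and control how learning proceeds. w is a learned parameter.
    """
    w = 0.0  # learned parameter, updated from the data
    n = len(xs)
    for _ in range(n_epochs):
        # Gradient of mean((w * x - y)^2) with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w


# Toy data generated by y = 2 * x (illustrative only)
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

# Same data, same model, different hyperparameter values
w_good = fit_slope(xs, ys, learning_rate=0.05, n_epochs=200)
w_slow = fit_slope(xs, ys, learning_rate=0.0001, n_epochs=200)
print(w_good, w_slow)
```

With the larger learning rate the learned weight ends up very close to the true slope of 2, while the very small learning rate leaves it far from 2 within the same number of epochs. Gaps like this are what hyperparameter tuning tries to close.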

-

Hyperparameters are settings that control the learning process and can have a significant effect on the performance of machine learning models.

Examples of hyperparameters in the Random Forest algorithm are the number of estimators (n_estimators) and the maximum depth (max_depth).

Hyperparameter optimization is the process of finding the right combination of hyperparameter values to achieve maximum performance on the data in a reasonable amount of time.

Techniques for hyperparameter optimization:-

Grid Search:- Grid search evaluates every combination of the candidate values you specify for each hyperparameter. The number of combinations is the product of the number of candidate values per hyperparameter, so the full search quickly becomes computationally expensive as more hyperparameters or values are added.

Random Search:- This method works a bit differently: it samples a fixed number of random combinations of hyperparameter values and keeps the best one found. The drawback of random search is that, with a limited budget, it can miss important points (values) in the search space.
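The two strategies can be sketched in a few lines of plain Python. This is an illustrative sketch, not a library API: `validation_score` is a hypothetical stand-in for training a model and measuring its cross-validated score, and the candidate values in `search_space` are assumptions.

```python
import itertools
import random


def validation_score(n_estimators, max_depth):
    """Hypothetical stand-in for a cross-validated score (higher is better).

    In practice this would train and evaluate a model; here it is a toy
    function that peaks at n_estimators=200, max_depth=8.
    """
    return -((n_estimators - 200) / 100) ** 2 - ((max_depth - 8) / 4) ** 2


# Candidate values per hyperparameter (assumed for illustration)
search_space = {
    "n_estimators": [50, 100, 200, 400],
    "max_depth": [2, 4, 8, 16],
}


def grid_search(space, score_fn):
    """Evaluate every combination in the grid and return the best one."""
    names = list(space)
    best_params, best_score, n_evals = None, float("-inf"), 0
    for values in itertools.product(*(space[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        n_evals += 1
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, n_evals


def random_search(space, score_fn, n_iter, seed=0):
    """Evaluate n_iter randomly sampled combinations and return the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = {name: rng.choice(values) for name, values in space.items()}
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


grid_best, grid_score, grid_evals = grid_search(search_space, validation_score)
rand_best, rand_score = random_search(search_space, validation_score, n_iter=5)
print("grid search:", grid_best, "after", grid_evals, "evaluations")
print("random search:", rand_best, "after 5 evaluations")
```

Grid search here always pays for all 4 x 4 = 16 evaluations, while random search spends only the budget it is given and may or may not land on the best cell. In scikit-learn, the same ideas are available with cross-validation built in as `GridSearchCV` and `RandomizedSearchCV`.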

Bayesian Optimization:- Bayesian optimization belongs to a class of sequential model-based optimization (SMBO) algorithms, which use the results of previous iterations to decide which hyperparameter values to sample in the next experiment.
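As a rough illustration of the SMBO loop, the sketch below tunes a single hyperparameter with a small Gaussian-process surrogate and an upper-confidence-bound rule for picking the next point. Everything here is an assumption made for the example: the `objective` function is a hypothetical validation score over log10(learning rate), and the kernel, noise level, `kappa`, and starting points are arbitrary choices. Real projects would normally use a dedicated library rather than this hand-rolled version.

```python
import math


def objective(log_lr):
    """Hypothetical validation score vs. log10(learning rate); peaks at -2.5."""
    return -(log_lr + 2.5) ** 2


def rbf(a, b, length_scale=1.0):
    """Squared-exponential kernel between two scalar inputs."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def gp_posterior(xs, ys, x_new, noise=1e-4):
    """Posterior mean and standard deviation of the surrogate at x_new."""
    y_mean = sum(ys) / len(ys)  # center targets so a zero-mean prior is reasonable
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    weights = solve(K, k_star)  # K^-1 k*, reused for both mean and variance
    mean = y_mean + sum(w * (y - y_mean) for w, y in zip(weights, ys))
    var = rbf(x_new, x_new) - sum(w * k for w, k in zip(weights, k_star))
    return mean, math.sqrt(max(var, 0.0))


def bayes_opt(score_fn, candidates, init_idx, n_iter, kappa=2.0):
    """Sequentially pick the candidate with the highest upper confidence bound."""
    tried = list(init_idx)
    xs = [candidates[i] for i in tried]
    ys = [score_fn(x) for x in xs]
    for _ in range(n_iter):
        best_i, best_ucb = None, float("-inf")
        for i, x in enumerate(candidates):
            if i in tried:
                continue  # do not re-evaluate a point we already measured
            mean, std = gp_posterior(xs, ys, x)
            ucb = mean + kappa * std  # trade off predicted score and uncertainty
            if ucb > best_ucb:
                best_i, best_ucb = i, ucb
        tried.append(best_i)
        xs.append(candidates[best_i])
        ys.append(score_fn(candidates[best_i]))  # the expensive step in practice
    best = max(range(len(ys)), key=ys.__getitem__)
    return xs[best], ys[best], list(zip(xs, ys))


candidates = [-5.0 + 0.1 * i for i in range(51)]  # log10(lr) from -5 to 0
best_x, best_score, history = bayes_opt(objective, candidates, init_idx=[5, 45], n_iter=10)
print("best log10(lr):", best_x, "score:", best_score)
```

The key difference from grid and random search is inside the loop: each new point is chosen using a model fitted to all earlier results, so the evaluation budget is concentrated where the surrogate predicts either a high score or high uncertainty.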